⦁ CVSSv4 presentation at FIRST Conference 2023

https://www.first.org/cvss/v4-0/cvss-v40-presentation.pdf

⦁ CVSS v4.0 Public Preview Documentation & Resources

https://www.first.org/cvss/v4-0/

In this post we review the new version of CVSS (Common Vulnerability Scoring System), a key tool that helps cybersecurity professionals, system administrators, and developers assess the severity of vulnerabilities and determine the appropriate response. We explore its history, purpose, and components, and outline what has changed from the previous version.

A brief review

CVSS is an open standard for assessing the severity of security vulnerabilities in computer systems. It provides a way to capture the main characteristics of a vulnerability and produce a numerical score that reflects its severity, which can then be translated into a qualitative rating. It is developed by a Special Interest Group (SIG) of the FIRST organization and has undergone several changes over time. Its goal is to communicate and share details about vulnerabilities using a common language, improving collaboration and information sharing among the organizations that make up the cybersecurity ecosystem.

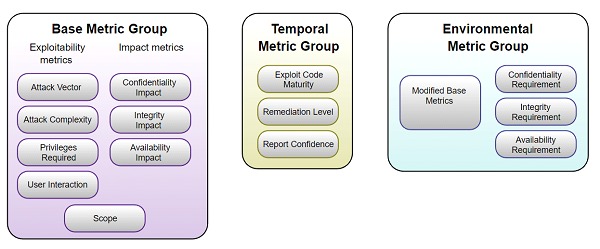

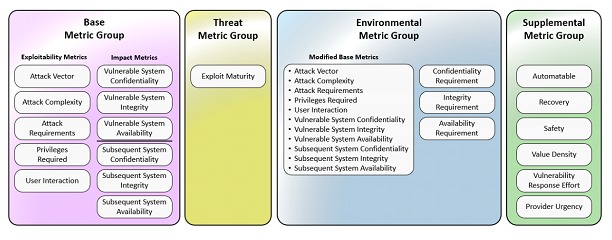

Up to version 3.1, the system consisted of three groups of metrics: base metrics, which capture the intrinsic characteristics of a vulnerability; temporal metrics, which capture characteristics that change over time; and environmental metrics, which capture characteristics specific to a particular environment.

A long history

CVSS has come a long way to reach its current version. It all started in 2003, when the U.S. government's National Infrastructure Advisory Council (NIAC) initiated the research that led to the release of version 1 (CVSSv1) in 2005, with the goal of creating an open, universally standardized severity rating for software vulnerabilities. However, the initial version was not peer reviewed by other organizations, which prevented it from achieving scientific validity. For this reason, NIAC selected FIRST (Forum of Incident Response and Security Teams) to maintain it and lead its future development.

Feedback from companies and professionals using CVSSv1 in production suggested that it was not adequate, so work on version 2 (CVSSv2) began in the same year v1 was released, and v2 was published in 2007. However, the community that migrated to CVSSv2 pointed out a lack of granularity in several metrics, which produced scores that did not adequately distinguish vulnerabilities of different types and risk profiles, and noted that it required too much knowledge of the impact, which reduced its accuracy. These and other criticisms led to the release of version 3 (CVSSv3) in 2015, after three years of work. Everything seemed to be solved, but improvement had to continue: CVSSv3 still had weaknesses when rating vulnerabilities in emerging systems such as the Internet of Things (IoT), so in 2019 an update was released as version 3.1.

This version, while highly refined and mature, drew several very specific criticisms: the base score was being used as the primary input for risk analysis; it did not convey enough real-time detail on threats and supplementary impacts; and it was applicable only to IT systems, failing to represent domains such as healthcare, human safety, and industrial control. Critics also noted that scores published by vendors were often High or Critical, that granularity was insufficient (fewer than 99 discrete scores), and that temporal metrics did not effectively influence the final score. On top of that, the formula and calculations were frequently described as complicated and counterintuitive.

This brings us to June 2023, when the draft of version 4 was released for public comment. The comment period closes on July 31, and official publication is expected in the last quarter of the year.

New features

Version 4 of CVSS makes substantial changes to the system, so it must be fully understood before you adopt it. The main new features are described below.

To emphasize that CVSS is not just the Base score, a new nomenclature was adopted to identify which metric groups were used to produce a score: CVSS-B for Base metrics only, CVSS-BT for Base and Threat metrics, CVSS-BE for Base and Environmental metrics, and CVSS-BTE for Base, Threat, and Environmental metrics.
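The nomenclature can be derived mechanically from a vector string. The Python sketch below assumes that a metric group counts as "used" only when at least one of its metrics carries a value other than Not Defined (X); that rule and the function name `nomenclature` are our own illustrative choices, not something stated above. The metric abbreviations are those of the CVSS v4.0 specification.

```python
# Threat and Environmental metric abbreviations in CVSS v4.0.
THREAT_METRICS = {"E"}
ENVIRONMENTAL_METRICS = {
    "CR", "IR", "AR",
    "MAV", "MAC", "MAT", "MPR", "MUI",
    "MVC", "MVI", "MVA", "MSC", "MSI", "MSA",
}


def nomenclature(vector: str) -> str:
    """Return CVSS-B, CVSS-BT, CVSS-BE or CVSS-BTE for a v4.0 vector string."""
    prefix, _, body = vector.partition("/")
    if prefix != "CVSS:4.0":
        raise ValueError("not a CVSS v4.0 vector")
    # Turn "AV:N/AC:L/..." into {"AV": "N", "AC": "L", ...}.
    metrics = dict(part.split(":", 1) for part in body.split("/"))
    # Assumption: a group only counts if some metric in it is not X.
    has_threat = any(metrics.get(m, "X") != "X" for m in THREAT_METRICS)
    has_env = any(metrics.get(m, "X") != "X" for m in ENVIRONMENTAL_METRICS)
    return "CVSS-B" + ("T" if has_threat else "") + ("E" if has_env else "")
```

For example, appending `E:P` to an otherwise Base-only vector turns a CVSS-B label into CVSS-BT, while `E:X` leaves it at CVSS-B.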

The temporal metrics group was renamed the Threat metrics group. Its metrics were simplified and clarified: Remediation Level (RL) and Report Confidence (RC) were removed, and Exploit Code Maturity was renamed Exploit Maturity (E), with clearer values. The idea is to adjust the Base "reasonable worst case" score using threat intelligence, which can lower the resulting CVSS-BTE score and so addresses the concern that many CVSS Base scores are too high.
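In CVSS v4.0, Exploit Maturity takes the values Attacked (A), POC (P), Unreported (U), and Not Defined (X), and, as we read the specification, X is scored as if it were Attacked. That is what "reasonable worst case" means in practice: without threat intelligence the score stays at the worst case, and intelligence can only move it down. The hypothetical helper below sketches that fallback rule; it does not compute a score.

```python
# Exploit Maturity (E) values defined by CVSS v4.0.
EXPLOIT_MATURITY = {
    "X": "Not Defined",
    "A": "Attacked",
    "P": "POC",
    "U": "Unreported",
}


def effective_exploit_maturity(value: str) -> str:
    """Return the E value used for scoring; Not Defined falls back to Attacked."""
    if value not in EXPLOIT_MATURITY:
        raise ValueError(f"unknown Exploit Maturity value: {value!r}")
    # Without threat intelligence, assume the reasonable worst case.
    return "A" if value == "X" else value
```

Because `effective_exploit_maturity("X")` returns `"A"`, a Base-only assessment and one with `E:X` describe the same worst case; only intelligence reporting POC or Unreported should pull the score below it.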

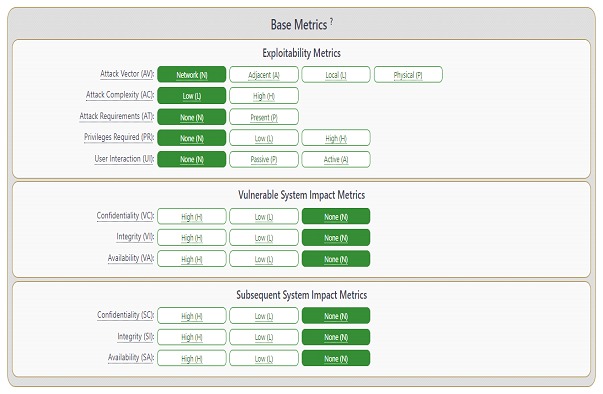

The new Attack Requirements metric addresses the fact that the "Low" and "High" values of Attack Complexity did not adequately reflect high-complexity conditions. The old definition was therefore split into two metrics. Attack Complexity (AC) reflects the engineering complexity of the exploit required to evade or circumvent defensive or security-enhancing technologies (defensive measures), while Attack Requirements (AT) reflects the preconditions of the vulnerable component that make the attack possible.

The User Interaction metric was updated to allow greater granularity when considering a user's interaction with a vulnerable component, and now has three values: None (N), when the system can be exploited without interaction from any human user other than the attacker; Passive (P), when exploitation requires limited, involuntary interaction by the targeted user with the vulnerable component and the attacker's payload; and Active (A), when exploitation requires the user to perform specific, conscious interactions.

The Scope metric was dropped. It is said to have been the least understood metric in CVSS history: it caused inconsistent scoring across product vendors and implied a "lossy compression" of the impacts on vulnerable and impacted systems. In its place, the impact metrics were expanded into two sets that are assessed explicitly: Confidentiality (VC), Integrity (VI), and Availability (VA) of the vulnerable system; and Confidentiality (SC), Integrity (SI), and Availability (SA) of subsequent (impacted) systems.
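To make the reshuffled Base metrics concrete, here is a minimal Python sketch that extracts and validates the eleven Base metrics of a v4.0 vector string. The value sets follow our reading of the CVSS v4.0 specification; the function name `parse_base_metrics` is hypothetical, and the sketch only checks syntax and allowed values, not scoring.

```python
# Allowed values for the eleven CVSS v4.0 Base metrics.
BASE_METRICS = {
    "AV": {"N", "A", "L", "P"},  # Attack Vector
    "AC": {"L", "H"},            # Attack Complexity
    "AT": {"N", "P"},            # Attack Requirements: None, Present
    "PR": {"N", "L", "H"},       # Privileges Required
    "UI": {"N", "P", "A"},       # User Interaction: None, Passive, Active
    "VC": {"H", "L", "N"},       # Vulnerable System Confidentiality
    "VI": {"H", "L", "N"},       # Vulnerable System Integrity
    "VA": {"H", "L", "N"},       # Vulnerable System Availability
    "SC": {"H", "L", "N"},       # Subsequent System Confidentiality
    "SI": {"H", "L", "N"},       # Subsequent System Integrity
    "SA": {"H", "L", "N"},       # Subsequent System Availability
}


def parse_base_metrics(vector: str) -> dict[str, str]:
    """Extract and validate the Base metrics of a CVSS v4.0 vector string."""
    prefix, *parts = vector.split("/")
    if prefix != "CVSS:4.0":
        raise ValueError("vector must start with 'CVSS:4.0'")
    metrics: dict[str, str] = {}
    for part in parts:
        name, sep, value = part.partition(":")
        if not sep or name in metrics:
            raise ValueError(f"malformed or duplicate metric: {part!r}")
        metrics[name] = value
    # Every Base metric is mandatory; there is no Scope metric any more.
    missing = [name for name in BASE_METRICS if name not in metrics]
    if missing:
        raise ValueError(f"missing Base metrics: {missing}")
    for name, allowed in BASE_METRICS.items():
        if metrics[name] not in allowed:
            raise ValueError(f"invalid value {metrics[name]!r} for {name}")
    return {name: metrics[name] for name in BASE_METRICS}
```

A vector that still uses a v3.1 value such as `UI:R`, or that omits the Subsequent System metrics, raises `ValueError`.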

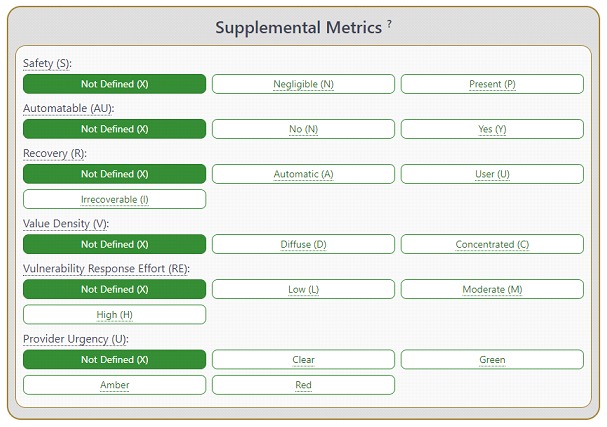

A new group of supplemental metrics was added to convey additional extrinsic attributes of a vulnerability that do not affect the final CVSS-BTE score: Safety (S), Automatable (AU), Recovery (R), Value Density (V), Vulnerability Response Effort (RE), and Provider Urgency (U). Information consumers can use these values to take additional actions if desired, applying their own local importance; none of them changes the numerical CVSS score. Each organization can decide how much weight each metric (or set of metrics) carries in its final risk analysis: more, less, or none at all. The metrics and their values simply convey additional extrinsic characteristics of the vulnerability itself.

The Automatable metric answers whether an attacker can automate exploitation of the vulnerability across multiple targets, based on the first four steps of the kill chain (reconnaissance, weaponization, delivery, and exploitation). The Recovery metric describes the resilience of a component or system in recovering services, in terms of performance and availability, after an attack has occurred. Value Density describes the resources over which the attacker will gain control with a single exploitation event, and has two possible values: Diffuse and Concentrated.

The Vulnerability Response Effort metric provides supplemental information on how difficult it is for consumers to provide an initial response to the impact of vulnerabilities in their infrastructure, products, and services, so they can factor in that effort when applying mitigations or scheduling remediation. Finally, the Provider Urgency metric offers a standardized way to incorporate an additional assessment from the provider, which can be any actor in the supply chain, although the one closest to the consumer is usually best placed to supply this information.
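Because supplemental metrics travel in the same vector string but never enter the score, a consumer can peel them off before scoring. The sketch below does exactly that; the allowed values reflect our reading of the CVSS v4.0 specification (for example `AU:Y`, `R:I`, `U:Amber`), and `split_supplemental` is an illustrative name, not part of any official tool.

```python
# Supplemental metrics and their allowed values (X = Not Defined).
SUPPLEMENTAL_METRICS = {
    "S": {"X", "N", "P"},                          # Safety: Negligible, Present
    "AU": {"X", "N", "Y"},                         # Automatable: No, Yes
    "R": {"X", "A", "U", "I"},                     # Recovery: Automatic, User, Irrecoverable
    "V": {"X", "D", "C"},                          # Value Density: Diffuse, Concentrated
    "RE": {"X", "L", "M", "H"},                    # Vulnerability Response Effort
    "U": {"X", "Clear", "Green", "Amber", "Red"},  # Provider Urgency
}


def split_supplemental(vector: str) -> tuple[str, dict[str, str]]:
    """Return (vector without supplemental metrics, supplemental metrics)."""
    prefix, *parts = vector.split("/")
    scored, supplemental = [prefix], {}
    for part in parts:
        name, _, value = part.partition(":")
        if name in SUPPLEMENTAL_METRICS:
            if value not in SUPPLEMENTAL_METRICS[name]:
                raise ValueError(f"invalid value {value!r} for {name}")
            # Kept for local decision-making; never passed to the scorer.
            supplemental[name] = value
        else:
            scored.append(part)
    return "/".join(scored), supplemental
```

The first element of the result is what a scoring routine would consume; the dictionary is what an organization would weigh according to its own priorities.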

Two other scoring systems address complementary aspects of vulnerability assessment and patch prioritization, adding some predictive capability and decision support to vulnerability scoring. The first is EPSS (Exploit Prediction Scoring System), a data-driven effort to estimate the probability that a software vulnerability will be exploited within the next 30 days. The second is SSVC (Stakeholder-Specific Vulnerability Categorization), a decision-tree system for prioritizing actions during vulnerability management.
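To illustrate what "decision tree" means here, the following toy Python function asks three questions and returns an action. The decision points loosely echo those used in SSVC (exploitation status, automatability, technical impact), but the branching logic and the outcome labels are hypothetical and much simpler than any published SSVC tree.

```python
# A toy, hypothetical decision tree in the spirit of SSVC.
EXPLOITATION_STATES = ("none", "poc", "active")


def toy_ssvc_decision(exploitation: str, automatable: bool, total_impact: bool) -> str:
    """Walk the hypothetical tree and return 'track', 'attend' or 'act'."""
    if exploitation not in EXPLOITATION_STATES:
        raise ValueError(f"unknown exploitation state: {exploitation!r}")
    if exploitation == "active":
        # Exploitation observed: act unless the attack is both
        # non-automatable and limited in impact.
        return "act" if automatable or total_impact else "attend"
    if exploitation == "poc":
        # Proof of concept only: escalate when both risk factors are present.
        return "attend" if automatable and total_impact else "track"
    # No known exploitation: keep monitoring.
    return "track"
```

Unlike a CVSS score, the output is an action rather than a number, which is the key difference in approach.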

The OT era in CVSS

One of the most notable additions in this version is the new focus on OT (Operational Technology) through the Safety metric and values. Today, many vulnerabilities have impacts beyond the traditional C/I/A triad of logical impact. A growing concern is that, whether or not logical impacts are recognized in a vulnerable or affected system, exploiting a vulnerability can cause tangible harm to humans. The IoT, ICS (Industrial Control Systems), and healthcare sectors are especially keen to identify this type of impact so they can prioritize issues in line with these growing concerns.

To this end, safety can be expressed in two ways: as a consumer-assessed Environmental value and as a provider-supplied Supplemental value. In the first case, where a system has no intended use or purpose directly aligned with safety but may have safety implications depending on how or where it is deployed, exploiting a vulnerability in that system could have a physical, human safety impact, which can be represented in the Environmental metrics group. This value measures the impact on a human actor or participant who may be injured as a result of the vulnerability. Unlike other impact metric values, Safety can only be associated with the Subsequent System impact set, and must be considered in addition to the Availability and Integrity impact values. With Modified Subsequent System Integrity: Safety (MSI:S), exploitation compromises the integrity of the vulnerable system (e.g., changing the dosage of a medical device), resulting in an impact on human health and safety. With Modified Subsequent System Availability: Safety (MSA:S), exploitation compromises the availability of the vulnerable system (e.g., making a vehicle's braking system unavailable), resulting in an impact on human health and safety.

On the other hand, there is the provider-supplied Supplemental Safety metric, which applies when a system has an intended use aligned with safety, so exploiting a vulnerability may have a safety impact that can be represented in the Supplemental metrics group. Its possible values are: Present (P), when the consequences of the vulnerability meet the IEC 61508 definition of the "marginal", "critical", or "catastrophic" consequence categories; Negligible (N), when the consequences meet that standard's definition of the "negligible" category; and Not Defined (X), when no value has been set for the vulnerability. Note that providers are not required to supply supplemental metrics; they may provide them as needed, based solely on what they choose to report on a case-by-case basis.
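The two ways of recording safety can be told apart in a vector string: `MSI:S` or `MSA:S` in the Environmental group, which feeds the score, versus `S:P` or `S:N` in the Supplemental group, which does not. The following sketch, using the hypothetical helper name `safety_signals`, reports both and rejects the value `S` on any other modified impact metric, following the restriction described above.

```python
# Only these modified impact metrics accept the Safety (S) value.
SAFETY_CAPABLE = {"MSI", "MSA"}
MODIFIED_IMPACT = {"MVC", "MVI", "MVA", "MSC", "MSI", "MSA"}


def safety_signals(vector: str) -> dict[str, bool]:
    """Report where a CVSS v4.0 vector records a safety impact."""
    metrics = {}
    for part in vector.split("/")[1:]:
        name, _, value = part.partition(":")
        metrics[name] = value
    for name in MODIFIED_IMPACT - SAFETY_CAPABLE:
        if metrics.get(name) == "S":
            raise ValueError(f"Safety (S) is not a valid value for {name}")
    return {
        # Consumer-assessed, Environmental group: affects the score.
        "environmental": any(metrics.get(m) == "S" for m in SAFETY_CAPABLE),
        # Provider-supplied, Supplemental group: informational only.
        "supplemental": metrics.get("S") == "P",
    }
```

A medical device vendor might publish `S:P`, while a hospital deploying a general-purpose system in a clinical setting would record its own assessment with `MSI:S`.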

The system formula

The mathematics behind the CVSS calculation was long criticized up to version 3.1, so one of the most interesting aspects of v4 is its new scoring system, developed through a relatively complex process compared with the (more questionable) approach used previously. The process began by grouping the 15 million CVSS-BTE vectors into 270 equivalence sets and asking experts to compare the vectors representing each set. An ordering of the sets from least to most severe was then calculated, and experts were consulted again to decide which set marks the boundary between qualitative severity ratings, so that those boundaries stay compatible with CVSS v3.x. The vector groups in each qualitative severity range were then compressed into that range's scores (e.g., 9.0 to 10.0 for Critical, 7.0 to 8.9 for High, etc.), and a modification factor was created that adjusts scores within a vector group so that a change in any metric value results in a change in score. The intent is for that score change to be no greater than the uncertainty in the ranking of the vector groups, as measured from the comparison data.
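The full v4.0 calculation relies on the expert-derived lookup over equivalence sets described above, which is too large to reproduce here. The last step, mapping a numeric score to its qualitative rating, is simple, though, and uses the same bands as CVSS v3.x: None 0.0, Low 0.1 to 3.9, Medium 4.0 to 6.9, High 7.0 to 8.9, Critical 9.0 to 10.0. A minimal sketch, with a hypothetical function name:

```python
def qualitative_severity(score: float) -> str:
    """Map a CVSS score (0.0 to 10.0) to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"       # 0.1 to 3.9
    if score < 7.0:
        return "Medium"    # 4.0 to 6.9
    if score < 9.0:
        return "High"      # 7.0 to 8.9
    return "Critical"      # 9.0 to 10.0
```

Keeping these bands unchanged is what lets v4 scores be read alongside v3.x ratings.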

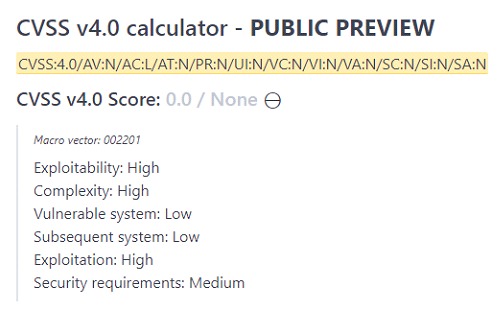

As in previous versions, an online calculator is available that makes it easier to visualize the attributes of each metric group, and that also serves as a teaching resource for designing and scoring simulated scenarios.

Vulnerabilities versus risks

Another drawback raised about careless or decontextualized use of CVSS is the confusion between technical severity and risk. On the one hand, CVSS Base (CVSS-B) scores represent technical severity: they take into account only the attributes of the vulnerability itself and are not recommended on their own for determining remediation priority. On the other hand, risk can be analyzed using the full set of CVSS-BTE attributes (base score, associated threat, and environmental controls), so that, when used correctly, these scores can represent the attributes relevant to risk more accurately, even more so than some risk methodologies.

Finally, FIRST recommends a series of best practices for using CVSS properly. First, use sources and databases to automate the enrichment of vulnerability information, such as the NVD (National Vulnerability Database) for Base metrics, asset databases for Environmental metrics, and threat intelligence for Threat metrics. It also suggests finding ways to view vulnerability information through important attributes and viewpoints, such as support teams, critical applications, internal versus external exposure, business units, or regulatory requirements.
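The enrichment practice can be sketched as assembling one vector from three sources. In the Python example below, the `Enrichment` record and `build_vector` function are hypothetical, the Base vector is assumed to have been retrieved already (for instance from an NVD entry), and only Exploit Maturity and the CR/IR/AR security requirements are added; the modified Base metrics of the Environmental group are left out for brevity. Values set to Not Defined (X) are omitted, which, as we understand the specification, is allowed.

```python
from dataclasses import dataclass, field

EXPLOIT_VALUES = {"X", "A", "P", "U"}
REQUIREMENT_VALUES = {"X", "H", "M", "L"}


@dataclass
class Enrichment:
    """Hypothetical record assembled from the three kinds of sources."""

    base_vector: str             # Base metrics, e.g. copied from an NVD entry
    exploit_maturity: str = "X"  # Threat metric E, from threat intelligence
    requirements: dict[str, str] = field(default_factory=dict)  # CR/IR/AR from an asset DB


def build_vector(info: Enrichment) -> str:
    """Append Threat and Environmental metrics to a Base vector, skipping X."""
    if info.exploit_maturity not in EXPLOIT_VALUES:
        raise ValueError(f"invalid E value: {info.exploit_maturity!r}")
    parts = [info.base_vector]
    if info.exploit_maturity != "X":
        parts.append(f"E:{info.exploit_maturity}")
    for name in ("CR", "IR", "AR"):
        value = info.requirements.get(name, "X")
        if value not in REQUIREMENT_VALUES:
            raise ValueError(f"invalid {name} value: {value!r}")
        if value != "X":
            parts.append(f"{name}:{value}")
    return "/".join(parts)
```

With no threat intelligence and no asset data, `build_vector` simply returns the Base vector, which keeps the result honestly labeled as CVSS-B.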

Conclusions

CVSS version 4 brings a much higher level of maturity than its predecessors. Reaching this point much earlier might not have been possible, since communities, organizations, and professionals had to grow along with the versions and with the requirements of the industry. FIRST's responsibility in publishing this kind of work is enormous, considering the many environments in which the scoring system is used, and despite the criticisms of previous versions, it has always managed to deliver work suited to the needs of the moment.