By the end of 2023, five vulnerabilities were disclosed in Ray, an open-source framework used primarily for AI workloads.

Following the news, Anyscale (the developer of Ray) published details on its blog addressing the vulnerabilities, clarifying the chain of events and explaining how each CVE was handled. While four of the reported vulnerabilities were fixed in Ray 2.8.1, the fifth (CVE-2023-48022) remains disputed: Anyscale does not consider it a vulnerability, so it has not received a fix.

Because of this, many development teams (and most static scanning tools) are unaware of the risk this issue poses. Some may have overlooked the relevant section of Ray's documentation and may not realize that running jobs without authentication is a documented feature.

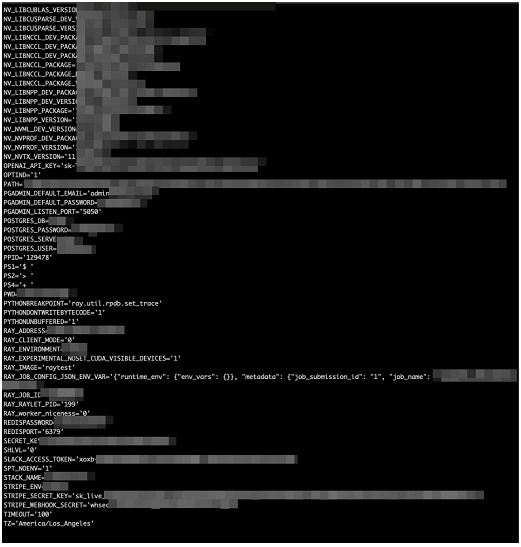

As a result, thousands of publicly exposed Ray servers around the world have been found vulnerable to this issue, dubbed ShadowRay. Some of the affected machines have been compromised for at least seven months. Many of them contained command history, making it easy for attackers to understand what runs on the machine and to leak sensitive production secrets used in previous commands.

Defining Ray

Ray is a unified framework for scaling AI and Python applications for a variety of purposes. In simple terms, it consists of an execution engine known as "Ray Core" and a set of complementary AI libraries.

At the time of writing, Ray's GitHub repository has 30,000 stars, and according to Anyscale, some of the largest organizations in the world use it in production, including Uber, Amazon and OpenAI.

Many projects rely on Ray for conventional SaaS, data and AI workloads, leveraging the project for its high levels of scalability, speed and efficiency.

Anyscale maintains the project, which serves as the basis for numerous libraries, including Ray Tune, Ray Serve and more.

The value of AI infrastructure

A typical AI environment holds a wealth of sensitive information, enough to bring down an organization. An MLOps (Machine Learning Operations) environment consists of many services that communicate with each other, within the same cluster and between clusters. When used for training or fine-tuning, it generally has access to datasets and models, on disk or in remote storage such as an S3 bucket. Often, these models or datasets are the unique, private intellectual property that differentiates an organization from its competitors.

In addition, AI environments typically have access to third-party tokens and integrations of many types (Hugging Face, OpenAI, Weights & Biases and other SaaS providers). AI models are now connected to enterprise databases and knowledge bases. AI infrastructure can be a single point of failure for AI-driven enterprises, and a treasure trove for attackers.

And as if that weren't enough, AI models typically run on expensive, high-powered machines, which makes those machines an attractive target for attackers.

Usage of Ray

Models such as GPT-4 comprise billions of parameters and require massive computational power. These models cannot simply be loaded into a single machine's memory. Ray is a technology that allows such models to run across many machines. It has become something of an industry best practice, especially among AI practitioners, who are proficient in Python and often need to run models distributed across multiple GPUs and machines.
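
As a rough illustration (the parameter count is a hypothetical round number, not a figure for any specific model, and only the weights are counted), a 70-billion-parameter model stored in 16-bit precision needs:

$$
70 \times 10^{9}\ \text{parameters} \times 2\ \frac{\text{bytes}}{\text{parameter}} = 1.4 \times 10^{11}\ \text{bytes} \approx 140\ \text{GB}
$$

That already exceeds the 80 GB of memory on a single high-end accelerator such as an 80 GB A100 or H100, before counting the activations, gradients and optimizer state that training adds, so the work has to be split across several devices.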

Some of Ray's capabilities are:

• Enables distributed workloads for training, serving and tuning AI models of all architectures and frameworks.

• Requires only basic Python proficiency: it has a simple Python API with minimal configuration.

• It is optimized for performance, with effortless installation, few dependencies and battle-tested, production-grade code.

• It goes beyond Python, allowing you to run jobs of any kind, including bash commands.

• It is the Swiss army knife for AI developers and practitioners, allowing them to scale their applications effortlessly.
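
To make the point about bash commands concrete: Ray's Jobs REST API accepts a job whose entrypoint is an arbitrary shell command. The sketch below only builds such a request with Python's standard library and never sends it. The dashboard address `127.0.0.1:8265` and the minimal JSON body are assumptions based on Ray's documented `POST /api/jobs/` route, and `build_job_request` is a hypothetical helper name.

```python
import json
import urllib.request

# Assumption: a Ray dashboard on its default port; the "/api/jobs/" path follows
# Ray's documented Jobs REST API. The request below is built but never sent.
DASHBOARD_URL = "http://127.0.0.1:8265"


def build_job_request(dashboard_url: str, entrypoint: str) -> urllib.request.Request:
    """Build (but do not send) a Ray job submission request.

    The entrypoint is an arbitrary shell command. Note that no credentials
    or Authorization header are part of the request.
    """
    body = json.dumps({"entrypoint": entrypoint, "runtime_env": {}}).encode("utf-8")
    return urllib.request.Request(
        url=dashboard_url.rstrip("/") + "/api/jobs/",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_job_request(DASHBOARD_URL, "echo hello from a bash entrypoint")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # Sending it would be urllib.request.urlopen(req); only do so against a cluster you own.
```

Nothing in the request identifies or authenticates the caller, which is exactly the property at the heart of CVE-2023-48022.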

CVE-2023-48022: ShadowRay

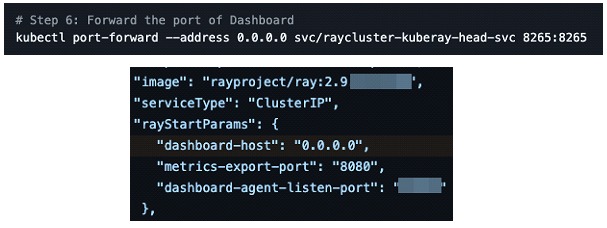

Ray exposes its dashboard (admin panel) and always binds to 0.0.0.0 (all network interfaces), along with port forwarding on 0.0.0.0, potentially exposing the machine to the Internet by default.

In addition, its API includes no authorization of any kind. As a result, anyone with network access to the dashboard (HTTP port 8265) could potentially invoke arbitrary jobs on the remote host.
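
A quick way to check whether a host you are responsible for exposes this port is a plain TCP connection test. This is a minimal standard-library sketch; a successful connection only shows that something is listening on port 8265, not that it is Ray, and `is_port_reachable` is a hypothetical helper name. Run it from outside your network perimeter, against your own hosts only.

```python
import socket

RAY_DASHBOARD_PORT = 8265  # dashboard port mentioned above


def is_port_reachable(host: str, port: int = RAY_DASHBOARD_PORT, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, unreachable or DNS failure: not reachable.
        return False


if __name__ == "__main__":
    import sys

    target = sys.argv[1] if len(sys.argv) > 1 else "127.0.0.1"
    state = "REACHABLE" if is_port_reachable(target) else "not reachable"
    print(f"{target}:{RAY_DASHBOARD_PORT} is {state}")
```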

According to Ray's official documentation, security best practices start with the following:

"... Security and isolation must be enforced outside of Ray's Cluster. Ray expects to operate in a secure network environment and act on trusted code. Developers and platform providers must maintain the following invariants to ensure the secure operation of Ray Clusters ..."

Because code execution capabilities are included by design, Anyscale's position is that users are responsible for their own security: the dashboard should not be exposed to the Internet, or should be accessible only to trusted parties. Ray lacks authorization on the assumption that it will operate in a secure environment with proper routing logic: network isolation, Kubernetes namespaces, firewall rules or security groups.
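
The "trusted parties" requirement boils down to an allowlist decision that a firewall rule or security group makes before traffic ever reaches port 8265. The sketch below expresses that decision with Python's standard `ipaddress` module; the ranges in `TRUSTED_NETWORKS` and the helper name are hypothetical placeholders.

```python
import ipaddress

# Hypothetical allowlist: internal ranges that should be able to reach the
# Ray dashboard. Replace with your own VPN / VPC / cluster ranges.
TRUSTED_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_trusted_client(client_ip: str, networks=TRUSTED_NETWORKS) -> bool:
    """Return True only if client_ip falls inside one of the trusted networks."""
    try:
        addr = ipaddress.ip_address(client_ip)
    except ValueError:
        return False  # malformed address: deny by default
    # An IPv6 address is never "in" an IPv4 network, so it is denied here too.
    return any(addr in net for net in networks)


if __name__ == "__main__":
    for ip in ("10.1.2.3", "8.8.8.8"):
        print(ip, "allowed" if is_trusted_client(ip) else "denied")
```

In practice this decision belongs in the network layer (security groups or firewall rules) rather than in application code; the sketch only shows the default-deny logic those rules should encode.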

The problem becomes more complex when third-party developers are unaware of these weaknesses. For example, Ray's official Kubernetes deployment guide and the KubeRay Kubernetes operator suggest that teams expose the dashboard on 0.0.0.0.
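
A simple pre-deployment check can flag this setting. The sketch assumes the head node's `rayStartParams` (the KubeRay field that passes flags such as `dashboard-host` to `ray start`) has already been loaded into a Python dict, for example from the RayCluster YAML; the function name is hypothetical.

```python
import ipaddress


def dashboard_binds_all_interfaces(ray_start_params: dict) -> bool:
    """Return True if `dashboard-host` is a wildcard address (0.0.0.0 or ::).

    Assumption: ray_start_params mirrors a KubeRay head group's rayStartParams.
    """
    value = ray_start_params.get("dashboard-host")
    if value is None:
        return False
    try:
        # is_unspecified is True for 0.0.0.0 and ::, i.e. "all interfaces".
        return ipaddress.ip_address(str(value).strip()).is_unspecified
    except ValueError:
        # Hostnames such as "localhost" are not IP literals; not flagged here.
        return False


if __name__ == "__main__":
    # Example values shaped like a KubeRay head group's rayStartParams.
    head_params = {"dashboard-host": "0.0.0.0"}
    print(dashboard_binds_all_interfaces(head_params))
```

A match is not proof of Internet exposure, since a Service, load balancer or firewall still decides who can connect, but it marks the clusters whose network controls need review.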

ShadowRay Impact

A compromised production AI environment can allow an attacker to undermine the integrity or accuracy of an entire AI model, steal models, or tamper with models during the training phase.

Affected organizations come from many industries, including medical companies, video analytics companies, elite educational institutions and many more. A large amount of sensitive information has been leaked through the compromised servers, including environment variables and credentials for OpenAI, Stripe, Slack and databases.

Conclusions

Typically, AI experts are not security experts, which can leave them unaware of the real risks that AI frameworks present.

Because the API has no authorization, deployments that do not follow these best practices are exposed to remote code execution attacks. The CVE is labeled "disputed"; in such cases, the CVE Program makes no determination as to which party is correct.

"Disputed" tags make this type of vulnerability difficult to detect, since many scanners simply ignore disputed CVEs. Users may therefore remain unaware of the risk, even when using the most advanced solutions on the market.
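
The effect is easy to see in a toy model of a scanner. The record format, the placeholder ID `CVE-0000-0001` and both function names are hypothetical; real scanners and vulnerability feeds differ in detail.

```python
from dataclasses import dataclass


@dataclass
class CveRecord:
    # Hypothetical, simplified record; real vulnerability feeds use richer schemas.
    cve_id: str
    package: str
    disputed: bool


def naive_scan(records, installed_packages):
    """Report CVEs for installed packages, silently skipping disputed ones."""
    return [r.cve_id for r in records
            if r.package in installed_packages and not r.disputed]


def inclusive_scan(records, installed_packages):
    """Report every matching CVE, labeling disputed ones instead of dropping them."""
    return [r.cve_id + (" (disputed)" if r.disputed else "")
            for r in records if r.package in installed_packages]


if __name__ == "__main__":
    feed = [
        CveRecord("CVE-2023-48022", "ray", disputed=True),
        CveRecord("CVE-0000-0001", "ray", disputed=False),  # placeholder entry
    ]
    print(naive_scan(feed, {"ray"}))
    print(inclusive_scan(feed, {"ray"}))
```

The naive filter reports only the placeholder, so a Ray deployment exposed through CVE-2023-48022 produces no finding for that issue; the inclusive version keeps it visible while still recording its disputed status.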

According to Anyscale, this issue is not a vulnerability but an essential feature of Ray's design, enabling job launching and dynamic code execution within a cluster. Although the lack of authorization is acknowledged as technical debt that will be addressed in a future release, implementing it is complex and may introduce significant changes. Anyscale therefore decided to postpone it and disputed CVE-2023-48022.

This approach reflects Anyscale's commitment to maintaining Ray's functionality while prioritizing security enhancements. This decision also underscores the complexity of balancing security and usability in software development, highlighting the importance of careful consideration when implementing changes to critical systems such as Ray and other open source components with network access.