CCN-CERT: Approach to Artificial Intelligence and Cybersecurity (October 2023)

In this post, we will take a brief look at the best practices report "Approaching Artificial Intelligence and Cybersecurity" published by the CCN-CERT.

The relationship between AI and cybersecurity has been consolidated over the past few years. Initially, cybersecurity systems relied primarily on predefined signatures and rules to detect threats. However, with the rise and evolution of cyber threats, the need for more advanced and adaptive systems has become apparent.

Importance of the Theme

For several years now, cybersecurity has extended beyond the purely technical and become a global concern that affects institutions, companies and individuals. As critical infrastructures become increasingly digitalized and interconnected, protecting these systems is essential.

Artificial intelligence (AI) plays a key role in this context: it can handle large volumes of data, adapt to constantly evolving threats, automate responses, identify complex patterns, and even help address the shortage of cybersecurity professionals.

However, it also poses ethical and regulatory challenges that must be addressed to ensure its fair and responsible use.

Artificial Intelligence

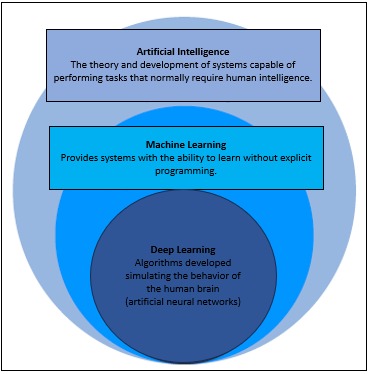

AI has evolved from a theoretical concept to a practical tool with applications in a variety of areas, including cybersecurity. In this context, AI offers advanced capabilities that surpass traditional security methods.

To understand how it benefits cybersecurity, it is crucial to be aware of the various AI techniques, such as machine learning, fuzzy logic, neural networks and generative AI. Each technique has its own advantages and applications in defending against ever-evolving cyber threats. This overview provides a solid foundation for understanding how AI is transforming system and data protection in cybersecurity.

Machine Learning

Machine Learning is a branch of artificial intelligence that focuses on developing algorithms and models that allow computers to learn and make decisions based on data without the need for explicit programming. Machine Learning algorithms can detect patterns and make predictions, and they generally improve as they are provided with more data.

Deep Learning

Deep Learning is a subcategory of Machine Learning that relies on multi-layered artificial neural networks (called deep neural networks) to perform complex learning tasks. It is used in applications such as image recognition, natural language processing and computer vision.

Classification Algorithms

These are Machine Learning techniques used to assign data to predefined categories or classes. These algorithms analyze features or attributes of the data and use them to make classification decisions. Common examples of classification algorithms include logistic regression, decision trees and support vector machines (SVM).
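As a minimal sketch of the idea, the following trains a logistic regression classifier from scratch, assuming two hypothetical features per message (number of links and number of urgency words). The feature choice, the toy data and the function names are invented for illustration and do not come from the CCN-CERT report; a real system would use far richer features and an established library.

```python
import math


def sigmoid(z: float) -> float:
    """Map a real-valued score to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(X, y, lr=0.1, epochs=2000):
    """Fit weights and bias with batch gradient descent on the log-loss."""
    n_features = len(X[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for features, label in zip(X, y):
            score = sum(w * f for w, f in zip(weights, features)) + bias
            error = sigmoid(score) - label
            for j, f in enumerate(features):
                grad_w[j] += error * f
            grad_b += error
        # Step against the average gradient over the whole training set.
        weights = [w - lr * g / len(X) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(X)
    return weights, bias


def predict(weights, bias, features) -> int:
    """Return 1 (phishing) if the predicted probability is at least 0.5, else 0."""
    score = sum(w * f for w, f in zip(weights, features)) + bias
    return 1 if sigmoid(score) >= 0.5 else 0


# Invented toy data: [number of links, number of urgency words]; label 1 = phishing.
X_train = [[0, 0], [1, 0], [1, 1], [0, 1], [4, 3], [5, 2], [3, 4], [6, 5]]
y_train = [0, 0, 0, 0, 1, 1, 1, 1]

weights, bias = train_logistic_regression(X_train, y_train)
predictions = [predict(weights, bias, row) for row in X_train]
```

The classifier learns one weight per feature and a bias, which is what "using attributes of the data to make classification decisions" amounts to in the simplest case. Decision trees and SVMs solve the same task with different decision boundaries.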

Generative AI

Generative AI refers to a set of artificial intelligence techniques and models that are used to autonomously generate data, content or information. This can include the creation of images, text, music or any other type of creative content. A prominent example of Generative AI is the generative adversarial network (GAN), which is used to create realistic images and other types of synthetic data.

Each of these branches can be applied to the field of cybersecurity through, for example:

• Threat detection (identifying patterns in large volumes of data)

• Malicious code analysis (or sample creation)

• Fraud detection (e.g. phishing)

• Adversary emulation

• Generation of pseudo-real or malicious content (useful for training detection systems)
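To make the first item, threat detection, a little more concrete, here is a deliberately simple sketch that flags unusual values in a series of hourly failed-login counts. It uses a plain z-score as a statistical stand-in rather than a trained model, and the data, the threshold and the function name are assumptions made for illustration only.

```python
from statistics import mean, pstdev


def find_anomalies(counts, threshold=2.0):
    """Return the indexes whose value lies more than `threshold`
    standard deviations away from the mean of the series."""
    mu = mean(counts)
    sigma = pstdev(counts)
    if sigma == 0:
        return []  # All values are identical, so nothing stands out.
    return [i for i, c in enumerate(counts) if abs(c - mu) / sigma > threshold]


# Invented example: failed logins per hour, with one obvious spike.
failed_logins_per_hour = [3, 5, 4, 6, 2, 4, 5, 3, 48, 4, 5, 3]
suspicious_hours = find_anomalies(failed_logins_per_hour)
```

In practice, machine learning detectors replace the fixed mean and threshold with a model learned from historical data, but the underlying goal of separating normal patterns from abnormal ones is the same.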

Generative AI and Cybersecurity

Generative artificial intelligence (AI) has become valuable in a number of fields, including cybersecurity, where it offers both benefits and potential threats.

Among the benefits to the field are the ability to generate synthetic data to train intrusion detection systems without compromising privacy, simulate attacks to test the robustness of systems, create realistic penetration testing scenarios, and apply reinforcement learning to improve detection and response to threats in real time.

However, it also poses challenges and potential threats that should be approached with caution:

• Vulnerabilities during and after model training: Generative AI models can expose training data to security risks, as it is not always known what data is used and how it is stored.

• Violation of personal data privacy: The lack of regulation regarding data entered into generative models can lead to the use of sensitive data without complying with regulations or obtaining permissions, which could result in the exposure of personal information.

• Intellectual property exposure: Organizations have unintentionally exposed proprietary data to generative models, especially when uploading proprietary items, API keys and sensitive data.

• Jailbreaks and cybersecurity solutions: Users can use jailbreaks to teach generative models to work against their rules, which can lead to sophisticated phishing and malware schemes.

• Malware creation and attacks: Malicious actors can use generative techniques to generate malware that evades traditional detection systems.

• Phishing and deception: Generative AI tools can be used to create fake communications that mimic legitimate ones, increasing the effectiveness of phishing attacks.

• Data manipulation and falsification: Generative techniques can be used to create false log records or manipulate data, making it difficult to detect attacks.

• Deepfakes: The ability to create deepfakes can be used in targeted attacks to deceive employees or executives.

Tips and best practices

Since the use of generative AI presents significant challenges, as we have seen, it is worth sharing some cybersecurity tips and best practices for using it:

• Carefully read the security policies of generative AI vendors: It is essential to understand the security policies of generative AI vendors, especially with regard to model training transparency, data removal, traceability, and opt-in and opt-out options.

• Do not enter sensitive data when using generative models: To protect sensitive data, avoid entering it into generative models, especially if you are not familiar with how they operate. Instead of relying on vendor security protocols, consider creating synthetic copies of the data, or avoid using these tools with sensitive data altogether.

• Keep generative AI models up to date (whenever possible): Generative models receive periodic updates that may include bug fixes and security enhancements. Keep them up to date to ensure their continued effectiveness.

• Train employees on proper use: Employees must understand what data they can use as inputs, how to benefit from generative AI tools, and what the compliance expectations are. In addition, they must comply with the organization's general regulations related to the use of electronic media.

• Use clear data security and governance tools and policies: Consider investing in data loss prevention tools, threat intelligence, cloud-native application protection platforms and/or extended detection and response (XDR) to protect your environment.
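The tip about not entering sensitive data can be partly automated by redacting obvious identifiers before a prompt ever leaves the organization. The sketch below is one possible approach, not a method described in the report: the regular expressions, the placeholder format and the `redact` function are hypothetical, and the patterns will miss many kinds of sensitive data.

```python
import re

# Hypothetical patterns for illustration; real deployments need patterns
# tuned to their own data and should not rely on regexes alone.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "IPV4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    "API_KEY": re.compile(r"\b(?:sk|key|token)[-_][A-Za-z0-9]{16,}\b"),
}


def redact(text: str) -> str:
    """Replace every match of each pattern with a labeled placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}_REDACTED]", text)
    return text


# Invented example prompt containing an email address, an IP and a fake key.
prompt = "User ana@example.com at 10.0.0.12 used key sk_abcdef1234567890abcd to log in."
safe_prompt = redact(prompt)
print(safe_prompt)
```

A filter like this complements, rather than replaces, employee training and dedicated data loss prevention tools.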

Reliance on automated solutions

It is also worth reflecting on over-reliance on automated solutions in cybersecurity, which carries significant implications and associated risks:

Lack of Interpretability: AI, especially deep learning, is often difficult to understand, raising concerns in cybersecurity, where traceability and understanding are essential.

False sense of security: Implementing AI solutions DOES NOT GUARANTEE complete protection, and relying solely on them can lead to a lack of preparedness to respond to threats.

Threat evolution: Attackers are constantly changing their methods, and if AI solutions are not updated, they can quickly become obsolete.

Attacks against AI: Attackers develop specific techniques to circumvent AI-based systems, which represents a risk if over-reliance is placed on these solutions.

Failures in automation: AI systems are only as good as the data they were trained on, and unrepresentative data can lead to incorrect predictions or failure to detect threats.

Displacement of human judgment: Human expertise remains essential in cybersecurity, as human teams intuitively understand systems and networks.

Maintenance and upgrade cost: Maintaining and upgrading AI systems can require significant investments in time and resources.

In conclusion, AI implementations should be seen as a complementary tool in cybersecurity, not as a replacement for traditional methods and techniques.

Combining human expertise with AI and law enforcement is crucial to defend against ever-evolving cyber threats.